Groundhog Day with SMB remount

A customer complained about network problems. An SMB/CIFS mount would always break down between two of his servers. Not that there were any noticeable problems, but there are still some error messages in the log.

Setup

The customer has a certain number of servers in his Layer2 segment. One of them is a Windows file server for a group of Windows computers. Another is the Linux monitoring system, which regularly tries to access the share.

The whole thing has a longer history, which led half a year ago to the recommendation to abandon SMB1. This part is not relevant, it is what followed the recommendation.

Since the transition, the monitoring Linux has had strange entries in the kernel log:

Feb 12 19:07:02 kernel: CIFS VFS: Server a.b.c.d has not responded in 120 seconds. Reconnecting...

Where do these 120 seconds come from? The man page does help:

echo_interval=n sets the interval at which echo requests are sent to the server on an idling connection. This setting also affects the time required for a connection to an unresponsive server to timeout. Here n is the echo interval in seconds. The reconnection happens at twice the value of the echo_interval set for an unresponsive server. If this option is not given then the default value of 60 seconds is used.

That explains the 120 seconds: Every minute an SMB echo is sent and if no echo is answered twice, the connection is believed to be dead. Therefore, the customer (understandably) thinks that there would be packet loss in the network.

To prove this assumption wrong takes weeks. For this purpose, the data traffic is recorded on both sides and put next to each other packet by packet.

Unfortunately, this still doesn't solve the real problem.

Analysis

Again and again such a recording is examined, in order to discover any unusual phenomenon.

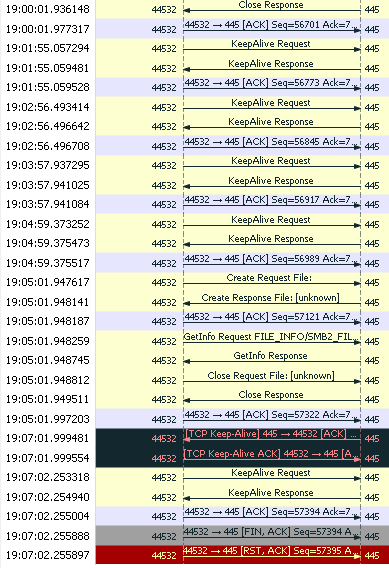

It's awesome to see each minute the keep-alive request-response game is happening. Then data is sent.

The communication comes to a sudden pause and the time-out stated in the log occurs. Between 19:05:01.99... and 19:07:02.25... there are a little more than 120 seconds. So far that seems to fit.

The transmission error (the one with the TCP keep-alive) is remarkable, because it hits the server directly before the connection is terminated. This must be investigated!

Why are the lines black? Because the keep-alive sends exactly one byte over again, which has been acked for a long time. It is a very unusual conversation. Wireshark colours such sequence errors in black.

- At 19:05:01.949511 128 bytes are received.

- At 19:05:01.997203 the reception of these 128 bytes is confirmed.

- At 19:07:01.999481 the final of the 128 bytes is transmitted again (together with the keep-alive flag).

If the acknowledgement of the data reception (ACK) had not arrived, the entire 128 bytes would be sent again. But they are not.

Did a firewall gamble on the sequence numbers underway? But there is no firewall in between, both servers are in the same LAN and see each other directly. But the other side verifies that the acknowledgement has arrived completely.

So why should the kernel resend a single byte? It turns out that the Windows implementation of the TCP keepalive sends exactly one byte again, while the Linux implementation sends the keepalive without payload. This is apparently an adjustment of Windows to broken middleware, which drops TCP packets without payload.

So the part is fine. But what is it then?

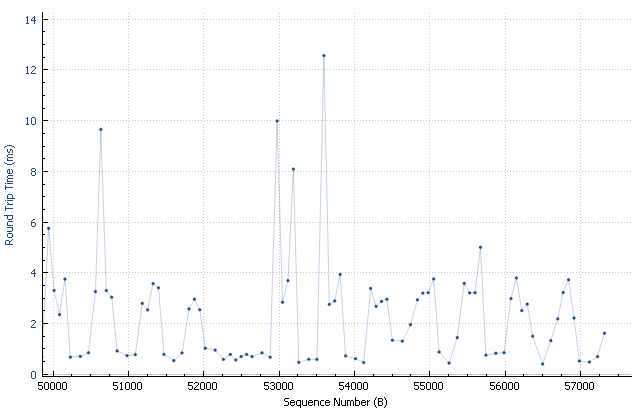

Maybe there are some delays somewhere? So let's take a look at the round-trip times. Maybe there are outliers.

There are no particular irregularities with the remote location. Everything looks nice.

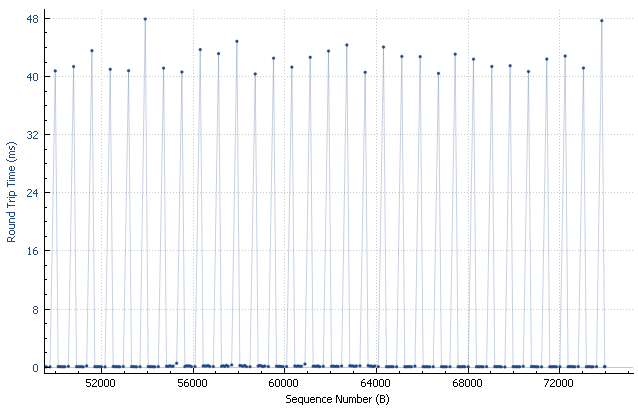

Even the local processing is completely inconspicuous: Everything that the kernel can process directly is done in almost no time. If the application software is involved, it takes longer. One can see very clearly how regular the measurements are.

Nothing is striking here either. But what is it then?

A closer look reveals that an echo request should have been sent at 19:06:00. But that is missing!

Kernel Archaeology

The function cifs_echo_request is responsible for sending the echo requests in the Linux kernel. There it says:

static void

cifs_echo_request(struct work_struct *work)

{

int rc;

struct TCP_Server_Info *server = container_of(work,

struct TCP_Server_Info, echo.work);

unsigned long echo_interval;

/*

* If we need to renegotiate, set echo interval to zero to

* immediately call echo service where we can renegotiate.

*/

if (server->tcpStatus == CifsNeedNegotiate)

echo_interval = 0;

else

echo_interval = server->echo_interval;

/*

* We cannot send an echo if it is disabled.

* Also, no need to ping if we got a response recently.

*/

if (server->tcpStatus == CifsNeedReconnect ||

server->tcpStatus == CifsExiting ||

server->tcpStatus == CifsNew ||

(server->ops->can_echo && !server->ops->can_echo(server)) ||

time_before(jiffies, server->lstrp + echo_interval - HZ))

goto requeue_echo;

rc = server->ops->echo ? server->ops->echo(server) : -ENOSYS;

if (rc)

cifs_dbg(FYI, "Unable to send echo request to server: %s\n",

server->hostname);

requeue_echo:

queue_delayed_work(cifsiod_wq, &server->echo, server->echo_interval);

}The code is interesting in several ways:

- On the one hand, an echo is sent only if there exists a real need to do so.

- On the other hand, this is a daisy chain scheduling: Only after work has been done the next action is planned.

As far as the needs are concerned, no echoes are sent out as long as the last response was received earlier than one echo interval.

But what is a response? In the code, the server→lstrp is always set to the current time whenever a packet is received. Regardless wether it is an echo response or normal data.

Subtracting HZ (one second) is a countermeasure preventing the echo response to your own request from being interpreted as interesting traffic. So this hard-coded value is calculated assuming that the answer from the remote station comes always more quickly than in a second.

If such an SMB connection runs over a longer distance or against a slower server, every second echo request will be suppressed if no further traffic occurs. This directly leads to the fact that SMB only works in the LAN.

Due to the protocol architecture, all requests are processed sequentially. If an echo request is sent while another request is running on the server, the echo request will not be processed until the previous request has been completed. Asynchronous processing was abandoned with the transition from SMB1 to SMB2. Now the server should tell the client that a more complex request is still being processed.

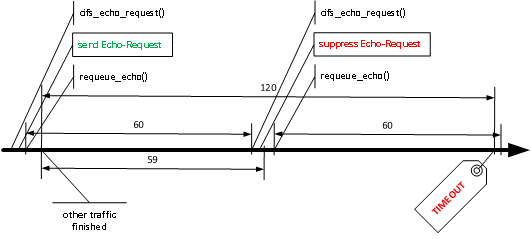

In this case an answer was received at 19:05:01.91... from the server. The echo packet should have been sent around 19:06:00. That's a very precise mistake!

The second problem is the scheduling. Only when all the work has been done (sending the echo packet) the next echo is scheduled to be sent 60 seconds later. The entire processing time is missing from the planning. This means that the echo packets are not sent exactly after 60 seconds, but always a bit later.

The recording shows this very clearly: 19:01:55.05, 19:02:56.49, 19:03:57.94, 19:04:59.37, 19:06:00.90, 19:07:02.27. The intervals are about 61.5 seconds. So 1.5 seconds more than planned. In the last step the interval is shorter, because the echo packet was not sent.

Racecondition

But what really happens is explained quickly: Suppressing the planned echo request is faulty.

In detail:

- Daisy chaining scheduling creates a gap.

- If traffic comes unfortunate shortly after the last echo, the planned echo is suppressed.

- Due to the gap, the next echo transmission is delayed until after the timeout, which is set hard to twice the echo interval.

It is particularly charming to realize that the final echo request is first sent and then the time-out strikes, because the first action when receiving the echo response is to check for a time-out and terminate it. Ironically, the successful echo triggered the abort.

Now the question arises since when the problem occurred and who is responsible for it:

- The patch c740 will send permanently echo requests every 60 seconds if no other traffic occurred. (Jan 11, 2011)

- The patch fda3 all these echoes are used to trigger a timeout at five times the interval (configurable) without traffic. (Jan 20, 2011)

- The patch 6dae switches from the configurable 60s x variable(5) to variable(60s) x two. (Feb 21, 2012)

The gap and suppression was introduced on 11 January 2011. However, this has no effect, as the time-out starts at five times the interval.

With the changeover on 21 February 2012, the gap now takes effect, as the time-out was set hard to twice the interval.

Solutions

There are three options.

First, eliminate the gap by scheduling at fixed times (always add 60 seconds to the last schedule time). By the way, it is not enough to move the rescheduling to the beginning of the routine. This only shortens the gap, but it will not disappear.

Another possibility is to send the echo requests in any case regardless of other traffic. In other words, if traffic is already running, the additional request will not bother any more. There is a potential risk that a server will choke on an echo between other requests.

Furthermore, avoiding the entire problem by waiting at least three times the interval length seems plausible.

If you want a quick fix, you should choose the third approach: Change a 2 to a 3 in the code:

static bool

server_unresponsive(struct TCP_Server_Info *server)

{

/*

* We need to wait 2 echo intervals to make sure we handle such

* situations right:

* 1s client sends a normal SMB request

* 2s client gets a response

* 30s echo workqueue job pops, and decides we got a response recently

* and don't need to send another

* ...

* 65s kernel_recvmsg times out, and we see that we haven't gotten

* a response in >60s.

*/

if ((server->tcpStatus == CifsGood ||

server->tcpStatus == CifsNeedNegotiate) &&

time_after(jiffies, server->lstrp + 2 * server->echo_interval)) {

cifs_dbg(VFS, "Server %s has not responded in %lu seconds. Reconnecting...\n",

server->hostname, (2 * server->echo_interval) / HZ);

cifs_reconnect(server);

wake_up(&server->response_q);

return true;

}

return false;

}All that remains is the question of the TCP keepalive. Windows will wait exactly 120s until it sends a keepalive on a TCP session. Note that the Windows server sends the TCP keepalive first, because it transmitted the last packet before it arrived at Linux.

Acknowledgement

The entire analysis was done by my colleague Jens, who just asked me to write things together. This is what I did here.

The only thing left to do now is to send a bug report to the right place.

Total 5 comments