Pimp my MPD

To terminate a large number of broadband customers on an LNS, MPD on FreeBSD was recommended to me. There seems to exist a group of Russian operators using this software for really large deployments. Alternatively, OpenL2TP could be used. However, OpenL2TP handles all the PPP sessions in user space, while MPD relies on NetGraph.

First contact

The setup is simple, even though the MPD documentation lacks expressiveness. What a command does is documented in one place at most. If you do not know where to look, you're stuck.

Additionally, MPD strictly separates the layers of operation. A setting can only be configured (and its documentation found) at the layer where it is relevant. For example, Van Jacobson compression is negotiated in the IPCP part of (PPP) bundles. Protocol field compression, as part of the PPP session, is negotiated in the link layer, which is responsible for setting up PPP. Therefore, all such settings live on this layer, even the authentication protocols to be used by LCP.

The configuration file is strictly linear. Each command can change the current layer, so the commands that follow might be executed in a different context. I recommend creating the configuration manually (online) with "log +console" enabled.

Disillusionment

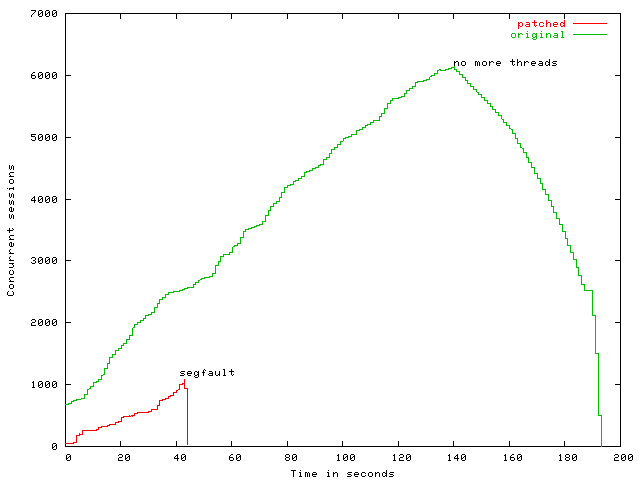

First experiments turned into pure horror.

After a quite pleasant start, "no more threads" and "Fatal message queue overflow!" messages were logged. MPD crashed. But it crashed in a controlled manner: it terminated all sessions before exiting.

The event handling for RADIUS requests caused the problems. MPD implemented event queuing by reading and writing an internal pipe. This pipe corresponded to a ring buffer containing the real event information. To prevent blocking writes to the pipe, no more bytes (dummy values meaning "there's an event") may be written than fit into the kernel buffer.
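The pipe-plus-ring-buffer scheme described above can be sketched roughly as follows. This is a minimal illustration in Python, not MPD's C code; the class name `PipeEventQueue` and the `capacity` parameter are made up for the example.

```python
import os
from collections import deque


class PipeEventQueue:
    """Ring buffer of events plus a pipe carrying one wake-up byte per event."""

    def __init__(self, capacity):
        # Hypothetical limit: it must not exceed what the kernel pipe
        # buffer can hold, otherwise a write could block.
        self.capacity = capacity
        self.ring = deque()
        self.rfd, self.wfd = os.pipe()
        os.set_blocking(self.wfd, False)  # a full pipe raises instead of blocking

    def post(self, event):
        # Refuse the event before touching the pipe when the ring is full.
        if len(self.ring) >= self.capacity:
            raise OverflowError("message queue overflow")
        self.ring.append(event)
        os.write(self.wfd, b"\0")  # dummy byte: "there is an event"

    def take(self):
        # Blocks until a wake-up byte is available, then returns the oldest event.
        os.read(self.rfd, 1)
        return self.ring.popleft()

    def close(self):
        os.close(self.rfd)
        os.close(self.wfd)
```

Once the ring is full, every further event has to be rejected, which is the situation behind the "queue overflow" message.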

The obvious patch is to increase the pipe size and extend the ring buffer. But I had no success: it segfaulted.

Since each event on each layer generates several further events, an event flood can happen. But the single thread for event processing must not block! If any potential blocking may occur, a new thread is spawned and the event is handled asynchronously. So RADIUS authentication and accounting exceeded the limit of 5000 threads per process. On the RADIUS server, the load looked similar.

During my tests, a carrier experienced how badly RADIUS servers may react. The commercial LAC queries RADIUS on every login attempt to determine the appropriate LNS for the realm. During a simultaneous drop of all sessions, the carrier was overwhelmed by so many requests that its RADIUS servers (specifically the database backend) gave up and the entire DSL infrastructure went out of service. By now, the realm-to-LNS mapping is a static configuration.

Slowly, more slowly

It is always better to be kind to one's suppliers, particularly to the RADIUS servers. So I dropped the entire event handling of MPD and wrote it from scratch using mesg_queue. The required library was already used by MPD.

A distinction is made between "sequential" and "parallel" events. Sequential events run under the global lock of MPD. They stay serialized in the same order in which they were generated. Thus, the layers do not get confused, and event processing can rely on the previous actions. Parallel events are used for external communication. They are handled by a fixed number of active worker threads.
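The split into sequential and parallel events can be illustrated with a small sketch. It is written in Python for brevity and is not the actual patch; `run_events` and `NUM_WORKERS` are hypothetical names, and the pool size of 4 is an assumption.

```python
import queue
import threading

NUM_WORKERS = 4  # hypothetical pool size; the text only says "a fixed number"


def run_events(serial_events, parallel_jobs):
    """Run serial events in order under one lock, parallel jobs on a fixed pool."""
    global_lock = threading.Lock()  # stands in for MPD's global lock
    serial_q = queue.Queue()
    parallel_q = queue.Queue()

    def serial_loop():
        # Single consumer: the FIFO order of the queue is the processing order.
        while True:
            handler = serial_q.get()
            if handler is None:  # sentinel: shut down
                return
            with global_lock:
                handler()

    def parallel_loop():
        # External communication (e.g. RADIUS) may block here without
        # stalling the serial thread.
        while True:
            job = parallel_q.get()
            if job is None:
                return
            job()

    threads = [threading.Thread(target=serial_loop)]
    threads += [threading.Thread(target=parallel_loop) for _ in range(NUM_WORKERS)]
    for t in threads:
        t.start()
    for handler in serial_events:
        serial_q.put(handler)
    for job in parallel_jobs:
        parallel_q.put(job)
    serial_q.put(None)
    for _ in range(NUM_WORKERS):
        parallel_q.put(None)  # one sentinel per worker
    for t in threads:
        t.join()
```

Because the number of workers is fixed, a burst of external requests only makes the parallel queue longer instead of spawning thousands of threads.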

The lengths of both queues determine MPD's load (Load = 2×queue_len(serial) + 10×queue_len(parallel)). An overloaded MPD refuses new connections.
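As a worked example of the load formula, here is a small Python sketch. The weights 2 and 10 come from the formula above; the threshold `OVERLOAD_LIMIT` is a made-up value, since the text does not state where overload begins.

```python
SERIAL_WEIGHT = 2      # from the formula above
PARALLEL_WEIGHT = 10   # from the formula above
OVERLOAD_LIMIT = 600   # hypothetical threshold, not taken from MPD


def load(serial_len, parallel_len):
    """Load = 2 x queue_len(serial) + 10 x queue_len(parallel)."""
    return SERIAL_WEIGHT * serial_len + PARALLEL_WEIGHT * parallel_len


def accepts_new_connection(serial_len, parallel_len, limit=OVERLOAD_LIMIT):
    """An overloaded daemon refuses new connections."""
    return load(serial_len, parallel_len) < limit
```

With 10 serial and 5 parallel events queued, the load is 2×10 + 10×5 = 70. A waiting parallel event counts five times as much as a serial one.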

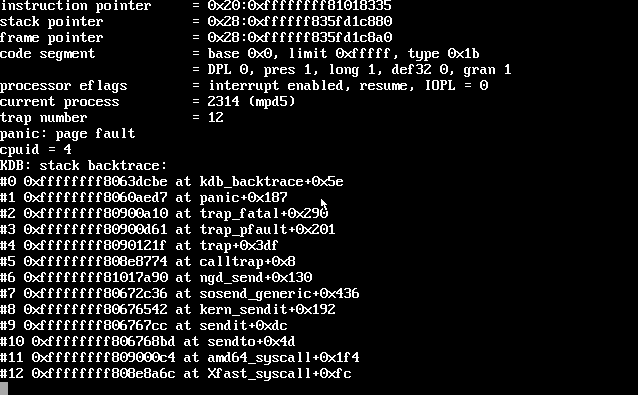

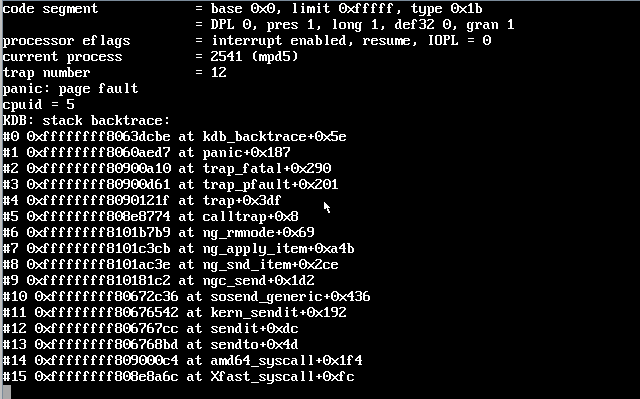

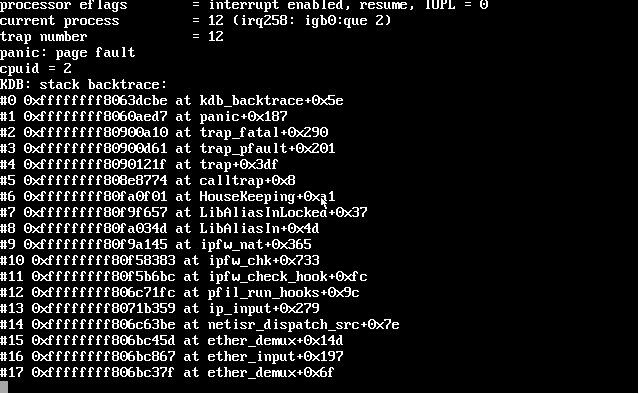

Well equipped, I was full of hope and promptly disappointed. The kernel panicked: deep, deep inside the NetGraph code.

And not just once, but also at a completely different place.

Is the NetGraph code broken? Does the kernel have problems with rapid creation and deletion of interfaces? Am I working too fast?

Even RADIUS accounting created problems, because a test device managed to connect, drop, and reconnect three times within one second. My SQL unique constraints refused to accept such data. MPD is definitely too fast! I added a pause of 20 ms (configurable) between the processing of two serial events.
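The pacing of serial events might look like this in outline. It is a Python sketch under the assumption that the pause follows each event; `drain_serial` and `SERIAL_PAUSE` are hypothetical names.

```python
import time

SERIAL_PAUSE = 0.020  # seconds; the 20 ms from the text, configurable


def drain_serial(events, pause=SERIAL_PAUSE, sleep=time.sleep):
    """Run handlers one by one, pausing after each; return the number processed."""
    count = 0
    for handler in events:
        handler()
        sleep(pause)  # gives the kernel and the accounting backend time to settle
        count += 1
    return count
```

With a pause of 20 ms, the serial thread processes at most 50 events per second.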

My thread for the serial processing runs separately from the main thread, which was not the case in the original code. I put a separate lock around each NetGraph call. This prevents concurrent access to NetGraph sockets.

With that, the system started to behave stably.

Stress testing

I chose OpenL2TP on Linux to generate lots of sessions:

- Open a random number of L2TP tunnels.

- Create up to 9000 PPP sessions (round robin over the L2TP tunnels) as fast as possible.

- Create new PPP sessions at a limited rate until 9000 sessions are established.

- Drop and recreate a random selection of 300 sessions to simulate a living broadband environment.

- Stay a while and finally drop the sessions one after the other.
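The bookkeeping behind such a test plan can be sketched in a few lines of Python. This only computes which session goes into which tunnel and which sessions get recycled; it does not drive OpenL2TP, and both function names are hypothetical.

```python
import random


def assign_round_robin(num_sessions, num_tunnels):
    """Return the tunnel index for each session, filling tunnels in turn."""
    return [session % num_tunnels for session in range(num_sessions)]


def pick_churn(num_sessions, count, rng):
    """Pick `count` distinct sessions to drop and recreate."""
    return rng.sample(range(num_sessions), count)


# Example: 9000 sessions over a random number of tunnels, 300 of them recycled.
rng = random.Random()
tunnels = rng.randint(1, 20)  # the upper bound of 20 is an arbitrary assumption
assignment = assign_round_robin(9000, tunnels)
churn = pick_churn(9000, 300, rng)
```

Round robin keeps the tunnels evenly filled, so no single tunnel dominates the setup rate.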

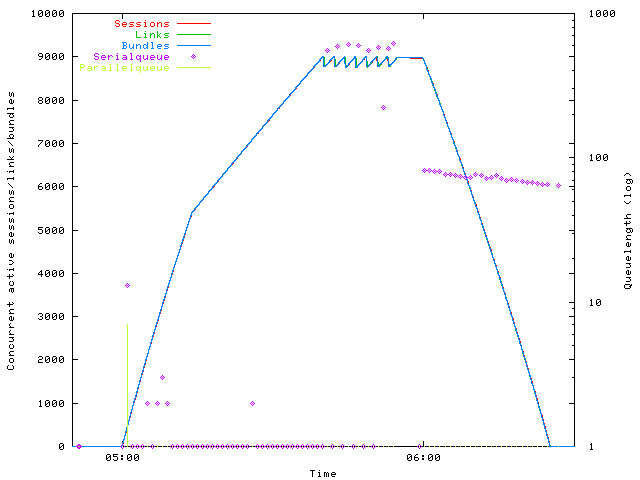

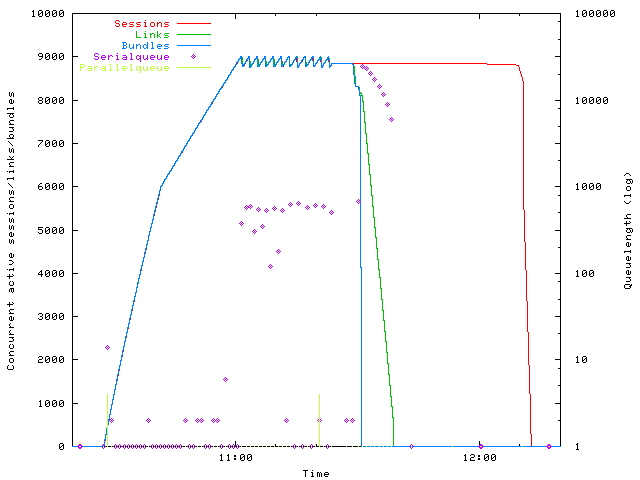

A typical test result is given below: "Sessions" are generated by OpenL2TP/pppd, each of them creates an MPD "Link" within a tunnel and negotiates a PPP "Bundle". Queue lengths are plotted too.

The initial length of the parallel queue is large, then the overload functionality throttles the connection rate. The plots for sessions, links and bundles are practically identical. There are no differences, because only successful sessions are counted.

It is also nice to see how high the serial queue jumps when interfaces disappear: the original MPD is definitely susceptible to queue overrun. If this effect occurs, the original MPD drops all connections and stops. An unacceptable situation.

The FreeBSD box with MPD runs at a maximum load of 3 and requires 280 MB of RAM. The Linux machine generates a load of 20 during connection setup and a load of 50 on connection release. The memory required on the Linux side is about 2 GB to hold the many pppd instances.

Touch the limits

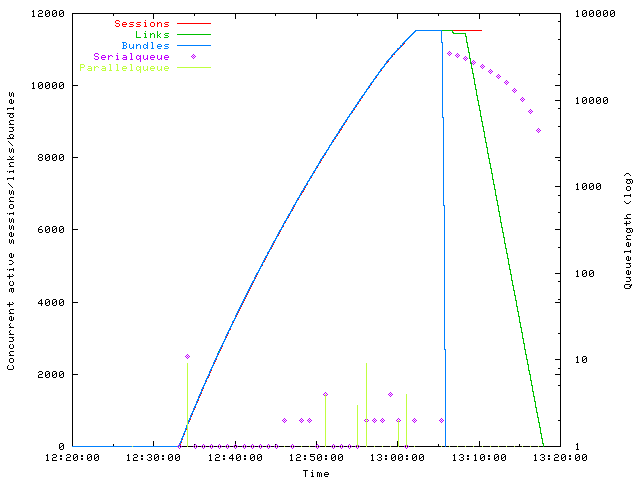

The next test simulates an interconnection breakdown: instead of terminating pppd instances, let's drop the L2TP tunnels.

Clearly, bundles, as the highest layer, are removed first. Then the associated L2TP links go down. The load on the BSD box rises to 6.

On the Linux side, a disaster starts: all pppd instances compete for the scheduler in order to stop working. The load rises to over 700, and the machine does not calm down. A courageous "killall -9 pppd" rescued the machine after half an hour.

But how far can we go? More than 10000? More than 20000? Let's try it out:

The setup rises rapidly to 11,500 sessions and then stops spontaneously. On the Linux side, OpenL2TP reports erroneous "RPC" parameters: it has reached the limits of its implementation. The machine gets tangled up and needs to be rebooted. The BSD box is fine.

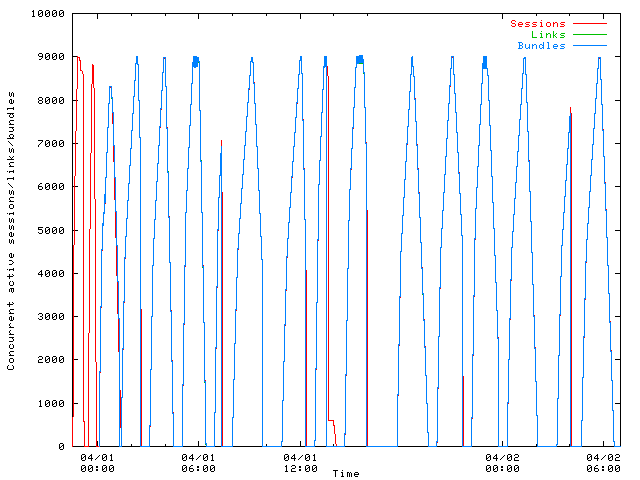

And now several times in succession:

Up and down, again and again. Sometimes there is a crash caused by my own stupidity, but it works!

Going live

In the production environment, it runs surprisingly quietly. Nevertheless, the system keeps crashing:

All crashes are now located only in the libalias module. Although the code was moved to svn-HEAD …

But there are further changes in the MPD code:

- In order to replace the code in production quickly, MPD now quits by itself when there are no more sessions open. The configuration option is called "delayed-one-shot". So MPD now needs to be called in a loop (/usr/local/etc/rc.d/mpd5 contains command="/usr/local/sbin/${name}.loop")

    $ cat /usr/local/sbin/mpd5.loop
    #! /usr/local/bin/bash
    nohup /usr/local/bin/bash -c "
    cd /
    while true; do
      /usr/local/sbin/mpd5 -k -p /var/run/mpd5.pid -O
      sleep 5
    done
    " >/dev/null 2>/dev/null </dev/null &

- The RADIUS accounting reports only a limited set of termination cause codes, but I do need the complete error message:

ATTRIBUTE mpd-term-cause 23 string

- Relevant system logging (interfaces come and go, users log on and off) is now activated permanently, not only for debugging. Similarly, errors are always relevant. Otherwise, the server would run blind.

Oh, and the patch: Here you are. Please feel free to ask for commercial support.
