DNS Dampening under the microscope

First results draw the attention of other DNS operators. I was urged to bring the patch (bind-9.9.2-dampening.patch) into a presentable form. Using this patch, real world measurements can be done.

Queue or Heap

I was most interested in facts supporting the heap data structure or prefering a queue/hash approach. My co-worker Jens insist in choosing a constant time access algorithm over the possibility to catch the worst case of the heap search. Of course, a hash is the natural choice for searching. Decay managment can be done using a queuing strategy.

My main assumption for selecting a heap sorted by penalty points is, that the most frequent queriers are located in front of the heap. Searching an querier IP address is therfore implemented by linear searching through the heap array. Because new entries are added at the end of the array, searching for those entries causes a full scan of all entries. On the other hand the very last element in the array is likely to have a very low penalty and can be overridden by new, currently unknown addresses. By decaying the first, the last and a random element on each update, a regular full maintainence can be avoided.

Jens' proposal is to use a hash table to search the element. Collisions can be implemented by a single linked list. In order to manage the decay of penalties, all elements are doubly linked into a queue. Every time an element is updated, it is moved to the rear of the queue. So in front of the queue the oldest element can be found. If the table is full, this element can be overwritten by the new, currently unknown address. This structure avoids a regular full maintainence.

I was faced with two approaches to sort the list of collisions. It is possible to keep the list sorted by IP address, in order to speed up the unsuccessful lookup. The other idea is to move the least recently used element into the front. Both variants were implemented and measured in a real world environment. Sorting by least recent use is strictly faster, so I sticked with it.

Interestingly the initially choosen heap function was not stable. Identical address could get different hash values. The same effect occured by natively applying memcmp to the structure isc_netaddr_t. Same addresses could be considered less or greater. The structure contains non-interesting elements which might be modified or uninitialized between different calls. That's why I wrote my own hash function as a variation of Adler16.

Real world performance

How do those algorithms work in practice?

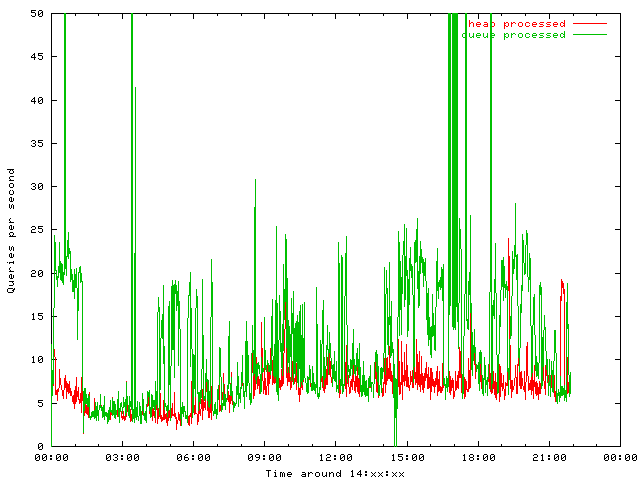

All DNS queries are put into both algorithms in parallel in order to collect statistics. I do expect the same external behavior for both but was really surprised:

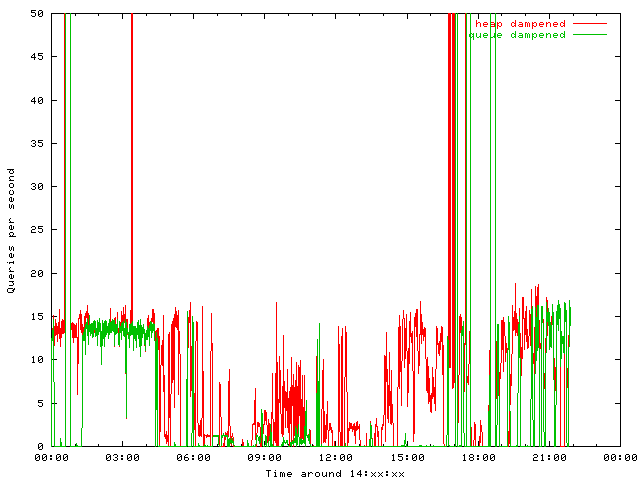

The DNS server responds to more packets when using the queue, so the heap is much more restrictive than the queue implementation. Obviously the heap sends more packets into dampening:

Both graphs are limited to 50 qps. Peak values are about a few thousend at 3 am and two magnitutes more at 17 pm. I do not have a fact based theory for this behaviour, but I believe that the heap does keep victim addresses attacked in larger intervals longer than the queue. Such long pause might cause the old entry to become overwritten.

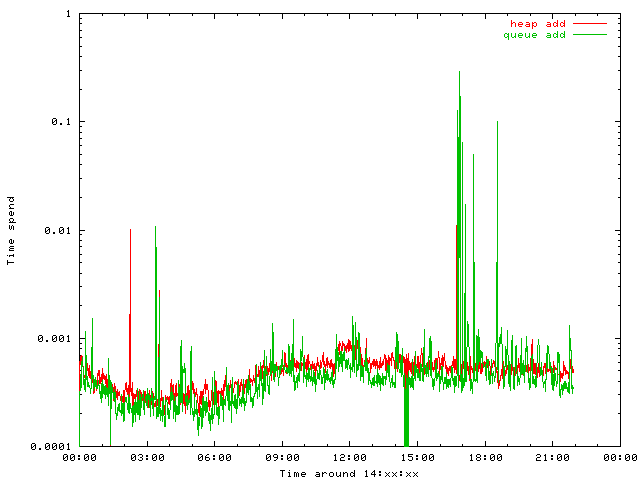

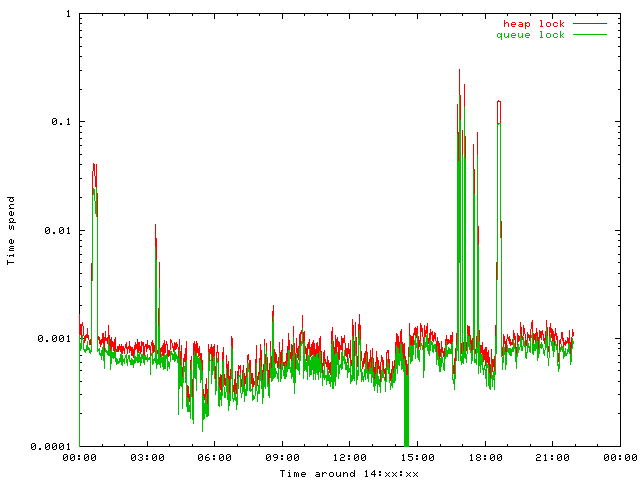

But I was interested in timing issues. Both algorithms are implemented without performance tricks, a lot of time is spend in checking the constraints to find programming errors. Nevertheless aggregating the (micro)seconds spend per minute are instructive.

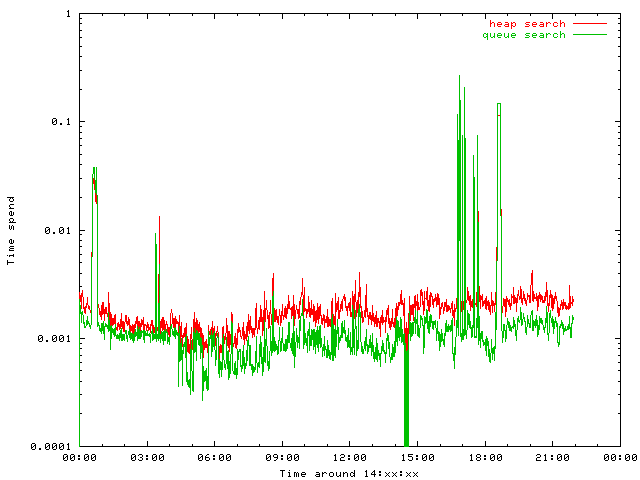

The most prominent question: Dies the hash really perform better then searching the heap?

Of course, it does. Without the special case of migitating a massive attack, the hash performs better.

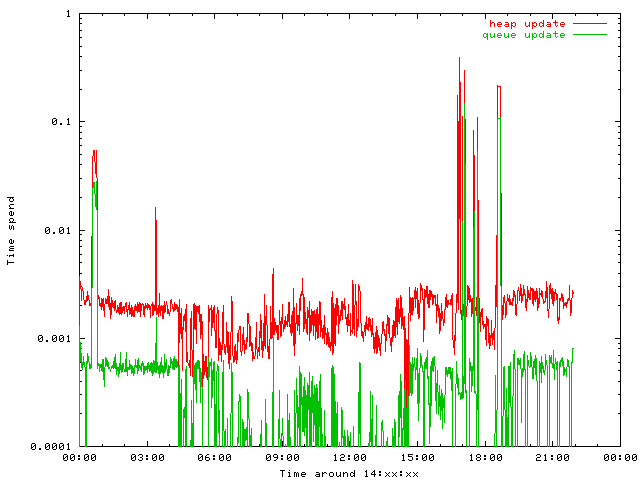

Updating a heap entry requires three updates and rebalancing. Updating a queue entry requires some link modifications to move the entry to the rear of the queue.

The heap performs as bad as expected.

Adding new entries is much less predictable The heap requires only the modification of the very last element, while queue as well as hash collision lists need to be updated. Futhermore a free space list needs to be maintained for the queue.

Both implementations are neary equal. The absolute timeing values are play at irrelevant low level.

Often forgotten but relevant is multitheadring. Both approaches can't deal with parallel access, so the require a "global" lock, which costs time too.

Still on low level, both algorithms block efficient query processing within bind. Because the heap implementation is called first, the worker threads are preordered when applying the queue. Trying a multithead aware data structure might be a really interesting project.

Conclusion: The heap is more efficient, but the queue performs better. On FreeBSD the heap implementation causes segfaults for still unknown reasons. The queue is working without any problems.

Inside attacks

The next aspect to consider is the structure of DNS amplification or reflection attacks, in order to compare dampening with response rate limiting.

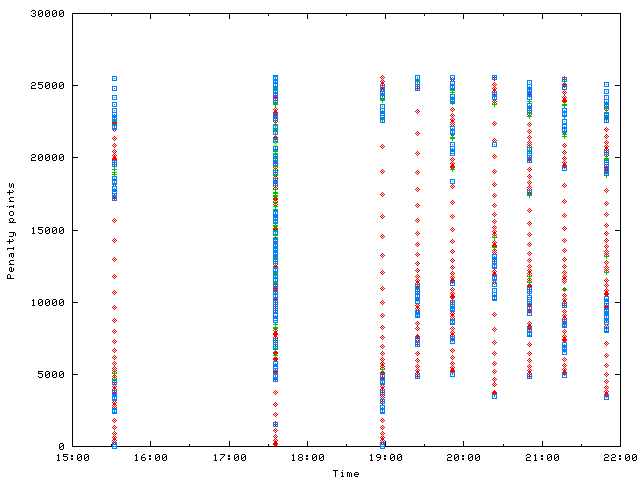

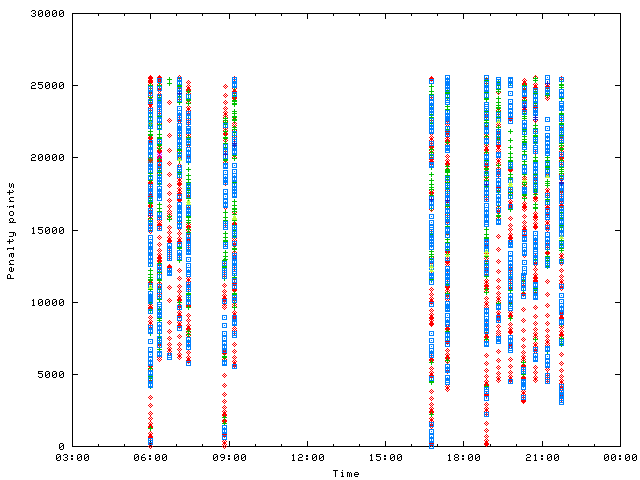

A typical attack to a given IP looks as follows. I use different colors and forms to denote different query names.

The attack collects a lot of penalty points within a few seconds and get dampened. After the a given time the decay reaches the lower limit and the address starts to collect new penalties. During 7pm and midnight the attacks are reallowed every twenty minutes for a few seconds. The default value of the halftime is ten minutes. So within twenty minutes the penalty is decayed to a quarter, sufficent low to switching back to normal.

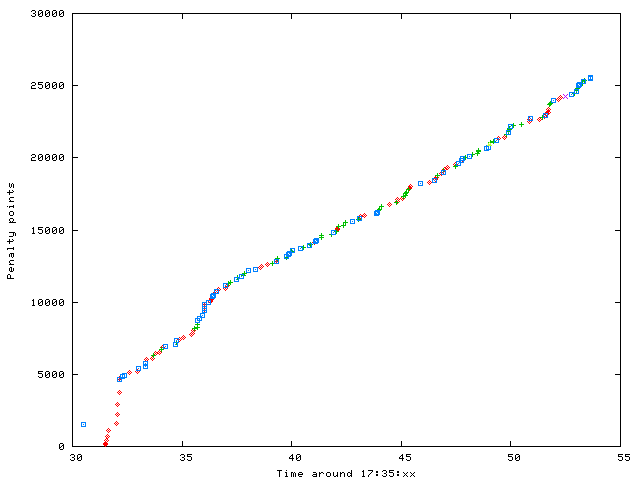

Between the red dots there are large and increasing gaps, a consequence of a sequence of queries with identical query ids. But what happens at 17:35?

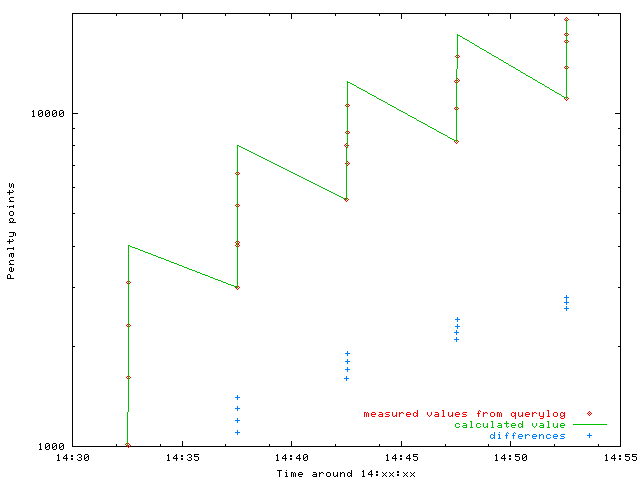

The first "high" value is the remaining penalty before applying the decay. For efficiency the heap should not be modified by each lookup. Otherwise the heap structure needs to be modified. That's why each search respond with the old penalty and gets updated afterwards.

The quick escalation of penalty by repeating the same query id over and over again is disturbed. Various other domain queries come in in parallel and confuse the algorithm. It is not longer able to detect repeated IDs. This kind of penalty becomes useless.

A deeper look to the queries reveal, that they conform the default values of the response rate limiting patch. No query is repeated more than ten times per second for the same query name. But a lot of query names are used.

This observation is not a special case, but occurs to other addresses, too:

conclusion: Attackers are aware of the response rate limiting patch and prefer DNS servers hosting a lot of (signed) zones. They try to circumvent rate limiting by distributing the query names broadly. For operators of DNS servers hosting many zones, the dampening might suit better. If the server has only a few zones, like a TLD, the rate limiting seems to be sufficient.

Blowing up

Many DNS operators monitor their systems. Many of them use an external SmokePing host. Usually the monitoring systems are listed in the exempt-client ACL. Usually.

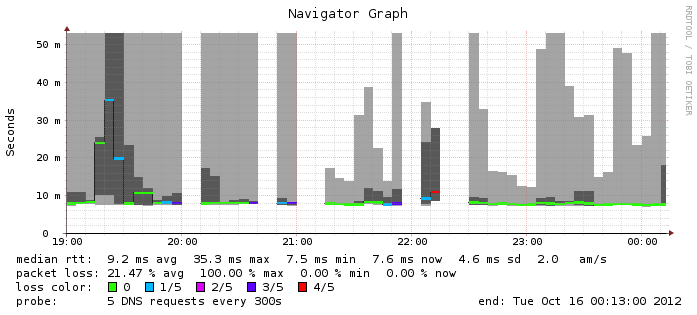

But let's look at the SmokePing of an external server which is not white listed.

The new version of bind (with dampening) was applied at 8pm. After that several complete outages occur. On the other hand the overall performance is improved. The outages are due to dampening. The performance increase is most probably a consequence of the more current version.

But why does a system gets dampened by sending five queries every five minutes?

This graph shows how the five queries collect a huge penalty. The decay does work (parallel lines due to logarithmic scale) but is not enough to keep the penalty low enough. Please note the correction by associating the returned penalty to the time of the last update.

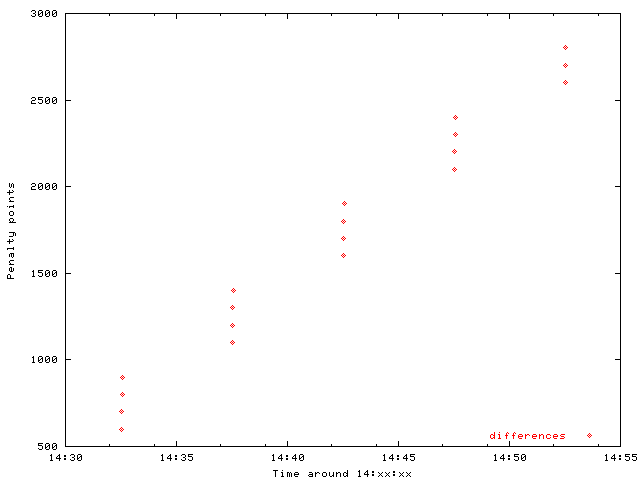

The differences of the penalty points reveal the truth:

Penalty points grow linenar per query. The added amount to the difference is 100, the value for duplicate query ids. Tcpdump tells us, that indeed SmokePing is using the same query id. The used probe is AnotherDNS. Let's have a look into the source:

my $packet = Net::DNS::Packet->new( $lookuphost, $recordtype )->data;

my $sock = IO::Socket::INET->new(

"PeerAddr" => $host,

"PeerPort" => $port,

"Proto" => "udp",

);

[...]

for ( my $run = 0 ; $run < $self->pings($target) ; $run++ ) {

[...]

$sock->send($packet);They generate a wire format of a DNS query and send it out repeatatly using the same UDP socket. Thats why the packet has the same query id as well as the same source port.

Net::DNS generates a new packet with a random id. If this code is moved to another perl script, it emits five identical query, but different ones on each run. If the code is executed by SmokePing the id remains constant.

The reason behind this effect is simple: SmokePing forks each probe and reinitializes the random state of each fork using srand. AnotherDNS itself forks again before probing. So each new fork inherits the same starting value for the random generator. Therefore the probe generates identical query ids.

The current version of SmokePing does not have this bug anymore.

Total 1 comments